AI Models Master Deepfake Sentiment Analysis

New research reveals advanced techniques significantly improve detection accuracy

Researchers have developed sophisticated Artificial Intelligence (AI) models capable of accurately analyzing the sentiment behind posts discussing deepfakes on social media. These advanced models, tested on a vast dataset scraped from X (formerly Twitter), demonstrate a remarkable ability to classify sentiment as positive, negative, or neutral, offering crucial insights into public perception and the spread of disinformation.

Pioneering Feature Extraction Techniques

Novel approaches boost machine learning performance

The study implemented a range of machine learning (ML) and deep learning (DL) models, employing various feature extraction techniques. These included established methods like Bag-of-Words (BoW) and TF-IDF, alongside word embeddings and a novel “transfer learning” (TL) feature extraction method. Hyperparameter tuning and k-fold cross-validation were used to rigorously assess and verify the performance of each model.
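To make the two established feature types concrete, the following is a minimal sketch of Bag-of-Words and TF-IDF vectors in plain Python. The example posts, the whitespace tokenizer, and the unsmoothed `log(N / df)` weighting are assumptions made for illustration; the article does not describe the study's preprocessing or its exact TF-IDF variant.

```python
import math
from collections import Counter

def tokenize(text):
    # Assumption: lowercase + whitespace split. A real pipeline would also
    # handle punctuation, URLs, mentions, and stop words.
    return text.lower().split()

def build_vocabulary(docs):
    # Map every distinct token to a column index, in sorted order.
    tokens = sorted({tok for doc in docs for tok in tokenize(doc)})
    return {tok: i for i, tok in enumerate(tokens)}

def bow_vector(doc, vocab):
    # Bag-of-Words: raw count of each vocabulary token in the document.
    counts = Counter(tokenize(doc))
    return [counts.get(tok, 0) for tok in vocab]

def tfidf_vectors(docs, vocab):
    # TF-IDF: term frequency scaled by log(N / document frequency).
    bows = [bow_vector(doc, vocab) for doc in docs]
    n_docs = len(docs)
    doc_freq = [sum(1 for row in bows if row[j] > 0) for j in range(len(vocab))]
    idf = [math.log(n_docs / df) for df in doc_freq]
    vectors = []
    for row in bows:
        total = sum(row) or 1  # guard against empty documents
        vectors.append([(count / total) * weight for count, weight in zip(row, idf)])
    return vectors

# Made-up posts, for illustration only.
posts = [
    "this deepfake is scary",
    "this deepfake is funny",
    "deepfake detection is improving",
]
vocab = build_vocabulary(posts)
bow = [bow_vector(p, vocab) for p in posts]
tfidf = tfidf_vectors(posts, vocab)
```

In this toy corpus "deepfake" occurs in every post, so its TF-IDF weight is zero, while a word such as "scary" that occurs in only one post keeps a positive weight. That is the practical difference between the two representations: BoW counts every word equally, whereas TF-IDF discounts words that do not help tell posts apart.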

Logistic Regression Leads with BoW Features

Key models show varied success in sentiment classification

With Bag-of-Words (BoW) features, Logistic Regression (LR) was the top performer at 87% accuracy. The study credited LR’s efficiency on high-dimensional data and its resistance to overfitting. Decision Tree (DT) and K-Nearest Neighbors Classifier (KNC) models showed moderate success, with 78% and 64% accuracy, respectively. Support Vector Machine (SVM) struggled, reaching only 43% accuracy, which the study attributed to its computational complexity and sensitivity to data variations.
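As a rough illustration of how a logistic regression classifier can be trained on BoW counts for three sentiment classes, here is a small sketch in plain Python. It uses multinomial (softmax) logistic regression trained by stochastic gradient descent with L2 regularization; the training posts, the learning rate, the epoch count, and the regularization strength are all made up for the example and are not taken from the study.

```python
import math
from collections import Counter

LABELS = ["positive", "negative", "neutral"]

# Made-up training posts (text, label index), for illustration only.
TRAIN = [
    ("love this clever deepfake", 0),
    ("amazing funny deepfake video", 0),
    ("deepfake scams are dangerous", 1),
    ("hate this harmful deepfake", 1),
    ("new deepfake detection tool released", 2),
    ("report on deepfake detection released", 2),
]

def featurize(text, vocab):
    # Bag-of-Words counts for one post, in vocabulary order.
    counts = Counter(text.lower().split())
    return [float(counts.get(tok, 0)) for tok in vocab]

def class_scores(W, b, x):
    # One linear score per class: w_k . x + b_k
    return [sum(w * v for w, v in zip(W[k], x)) + b[k] for k in range(len(W))]

def softmax(scores):
    # Subtract the maximum for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(X, y, n_classes, lr=0.1, epochs=300, l2=0.001):
    # Hypothetical hyperparameters; the study tuned its own.
    n_features = len(X[0])
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        for x, target in zip(X, y):
            probs = softmax(class_scores(W, b, x))
            for k in range(n_classes):
                # Gradient of cross-entropy loss w.r.t. the class-k score.
                err = probs[k] - (1.0 if k == target else 0.0)
                for j in range(n_features):
                    W[k][j] -= lr * (err * x[j] + l2 * W[k][j])
                b[k] -= lr * err
    return W, b

def predict(W, b, x):
    scores = class_scores(W, b, x)
    return max(range(len(scores)), key=scores.__getitem__)

vocab = sorted({tok for text, _ in TRAIN for tok in text.lower().split()})
X = [featurize(text, vocab) for text, _ in TRAIN]
y = [label for _, label in TRAIN]
W, b = train(X, y, n_classes=len(LABELS))

train_accuracy = sum(predict(W, b, x) == t for x, t in zip(X, y)) / len(y)
new_post_label = LABELS[predict(W, b, featurize("funny clever video", vocab))]
```

Because each BoW column gets its own weight per class, the model stays cheap to train even when the vocabulary is large, which is consistent with the efficiency on high-dimensional data that the study noted. Accuracy on six hand-written posts says nothing about real performance; the figures reported in the article come from the study's own dataset and cross-validation.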

TF-IDF Enhances Some Models, But LR Remains Dominant

Word embeddings unlock deeper understanding for DL models

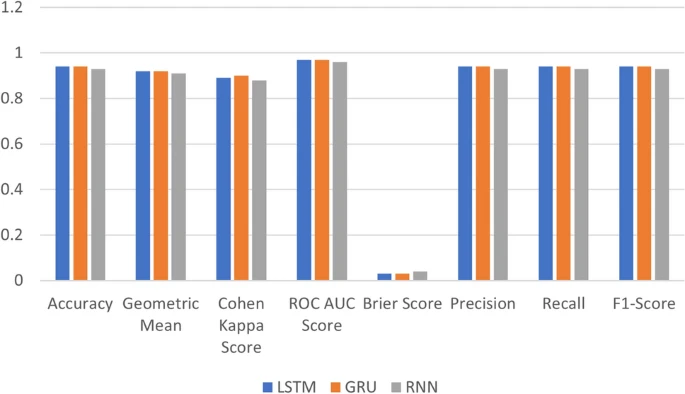

Moving to TF-IDF features, LR maintained its lead with 81% accuracy, closely followed by DT at 80%. KNC and SVM showed limited improvement, with SVM reaching only 48% accuracy. In contrast, deep learning models leveraging word embeddings achieved superior results. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks demonstrated the most promising outcomes, reaching 94% accuracy. These models excel at capturing long-term dependencies in text, a crucial factor for nuanced sentiment analysis.
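The ability of gated recurrent networks to retain information over long sequences comes from their gates. The sketch below implements a single GRU step in plain Python and shows the effect of the update gate. All weights, biases, and inputs are arbitrary illustrative values, not trained parameters, and the state update follows one common convention (h = z * h_prev + (1 - z) * candidate); some references swap the roles of z and 1 - z.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def const_matrix(rows, cols, value):
    return [[value] * cols for _ in range(rows)]

def make_params(hidden, inputs, update_bias):
    # Arbitrary small weights; only the update-gate bias is varied.
    return {
        "Wz": const_matrix(hidden, inputs, 0.1), "Uz": const_matrix(hidden, hidden, 0.1),
        "Wr": const_matrix(hidden, inputs, 0.1), "Ur": const_matrix(hidden, hidden, 0.1),
        "Wh": const_matrix(hidden, inputs, 0.1), "Uh": const_matrix(hidden, hidden, 0.1),
        "bz": [update_bias] * hidden, "br": [0.0] * hidden, "bh": [0.0] * hidden,
    }

def gru_step(x, h_prev, p):
    # Update gate z: how much of the old state to keep.
    z = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wz"], x), matvec(p["Uz"], h_prev), p["bz"])]
    # Reset gate r: how much of the old state feeds the candidate.
    r = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wr"], x), matvec(p["Ur"], h_prev), p["br"])]
    gated = [ri * hi for ri, hi in zip(r, h_prev)]
    cand = [math.tanh(a + b + c)
            for a, b, c in zip(matvec(p["Wh"], x), matvec(p["Uh"], gated), p["bh"])]
    # Blend old state and candidate according to the update gate.
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h_prev, cand)]

def run(update_bias, h0, inputs):
    p = make_params(hidden=len(h0), inputs=len(inputs[0]), update_bias=update_bias)
    h = list(h0)
    for x in inputs:
        h = gru_step(x, h, p)
    return h

h0 = [0.5, -0.5]
sequence = [[math.sin(t), math.cos(t)] for t in range(50)]

kept = run(20.0, h0, sequence)          # gate near 1: state carried forward
overwritten = run(-20.0, h0, sequence)  # gate near 0: state replaced each step
```

With the update gate saturated near 1, the initial state survives 50 steps almost unchanged; with the gate near 0, it is overwritten at every step. A trained GRU learns gate values between these extremes per token, which is what lets it hold on to an early cue, such as a negation, until it matters later in the post. LSTM networks achieve a similar effect with a separate cell state and three gates.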

Novel Transfer Features Drive Breakthrough Performance

Hybrid LGR model achieves near-perfect sentiment analysis

The novel transfer learning feature extraction technique, which combines features extracted by LSTM and DT models, yielded significant improvements. Logistic Regression trained on these transfer features achieved 97% accuracy. A hybrid LGR model, which integrates LSTM, GRU, and RNN components and uses the same transfer features, reached 99% accuracy. This hybrid model outperformed previous techniques across several metrics, including precision, recall, and F1 score.
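One plausible reading of "combining features extracted by LSTM and DT models" is that each base model's per-class outputs are concatenated into a new feature vector for a downstream classifier. The sketch below shows only that concatenation step. It is an assumption about the general shape of the technique, not the study's implementation, and the probability values and the names `lstm_outputs` and `dt_outputs` are hypothetical.

```python
def transfer_features(lstm_outputs, dt_outputs):
    """Concatenate two models' per-sample outputs into one feature vector each."""
    if len(lstm_outputs) != len(dt_outputs):
        raise ValueError("both models must score the same number of samples")
    return [list(a) + list(b) for a, b in zip(lstm_outputs, dt_outputs)]

# Hypothetical per-class probabilities (positive, negative, neutral)
# for three posts, as two already-trained base models might produce them.
lstm_outputs = [
    [0.80, 0.15, 0.05],
    [0.10, 0.70, 0.20],
    [0.30, 0.30, 0.40],
]
dt_outputs = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.5, 0.5],
]

features = transfer_features(lstm_outputs, dt_outputs)
# Each post is now described by 6 numbers instead of thousands of word counts.
```

The appeal of this kind of design is that the downstream classifier sees a compact, already-informative representation rather than raw text features. To avoid leaking labels, the base models' outputs for a given post should come from models that were not trained on that post, for example through out-of-fold predictions.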

“The results demonstrate that the proposed model performs better than the previous techniques discussed earlier by achieving a 99% accuracy,” the study states. The advance improves the ability to gauge public sentiment toward deepfake content, a growing concern in the digital age: a recent Pew Research Center study found that nearly half of U.S. adults have seen manipulated videos or images online (Pew Research Center, 2024).

Statistical analysis confirms model superiority

Statistical significance tests, including paired t-tests, supported the superiority of the proposed LGR model. Comparisons with classical ML and DL models showed statistically significant gains, with very low p-values indicating that the observed improvements are unlikely to be due to random chance. Across these comparisons, the hybrid LGR model consistently outperformed the other architectures.
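A paired t-test compares two models on the same cross-validation folds by testing whether the mean of the per-fold differences is distinguishable from zero. The sketch below computes the test statistic from scratch. The per-fold accuracies are invented for illustration and are not the study's results.

```python
import math
from statistics import mean, stdev

def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for paired samples."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    spread = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if spread == 0:
        raise ValueError("differences are constant; t is undefined")
    t = mean(diffs) / (spread / math.sqrt(n))
    return t, n - 1

# Invented 5-fold accuracies for two models evaluated on identical folds.
hybrid_folds = [0.99, 0.98, 0.99, 0.99, 0.98]
baseline_folds = [0.87, 0.86, 0.88, 0.85, 0.87]

t_stat, dof = paired_t_statistic(hybrid_folds, baseline_folds)

# Two-sided critical value of Student's t at alpha = 0.05 with 4 degrees of freedom.
T_CRITICAL_DF4 = 2.776
significant = abs(t_stat) > T_CRITICAL_DF4
```

Pairing matters because both models see the same folds, so fold-to-fold difficulty cancels out in the differences. With only a handful of folds the test has few degrees of freedom, and fold results are not fully independent, so a low p-value is supporting evidence rather than proof.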

Addressing Limitations and Future Directions

While the research highlights significant progress, certain limitations were acknowledged. The dataset, primarily sourced from X (Twitter), is imbalanced and does not represent the global population, potentially leading to biased learning. Furthermore, the high computational cost of the LGR model currently limits its real-time application.
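Class imbalance matters when reading accuracy figures, because a model that always predicts the most common class can already score well. The sketch below computes the class distribution and that majority-class baseline. The label counts are invented for illustration; the article does not report the dataset's actual class proportions.

```python
from collections import Counter

def class_distribution(labels):
    """Fraction of samples belonging to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}

def majority_baseline_accuracy(labels):
    """Accuracy of a model that always predicts the most frequent class."""
    return max(class_distribution(labels).values())

# Invented label counts, for illustration only.
labels = ["negative"] * 70 + ["neutral"] * 20 + ["positive"] * 10

distribution = class_distribution(labels)
baseline = majority_baseline_accuracy(labels)
```

In this invented example, a model that always answers "negative" would be 70% accurate without learning anything, which is why per-class precision, recall, and F1 are useful alongside accuracy on imbalanced data.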

Future work aims to incorporate multimodal data (visuals, audio) into sentiment analysis, integrate sarcasm detection, explore time-series sentiment analysis as awareness of deepfakes grows, and develop cross-lingual models for global monitoring. The integration of explainable AI techniques is also proposed to enhance the interpretability of sentiment analysis results for deepfake tweets.

Ethical considerations guide research

The study adhered to ethical research practices, ensuring individual privacy by using publicly available tweets and anonymizing all data. Metadata such as geotags and user handles were excluded from the manuscript to further protect user privacy. The research acknowledges potential data bias and the need for cautious interpretation of findings, emphasizing the importance of human-centered AI with risk-aware interpretations, especially when dealing with sensitive topics.