AI Reasoning Models Hit a Wall, Questioning Path to True Intelligence

Apple Research Reveals ‘Complete Accuracy Collapse’ in Complex Problem Solving

Despite rapid advances, leading artificial intelligence systems struggle with even moderately challenging puzzles, casting doubt on the near-term prospect of human-level AI. New research from Apple suggests that current reasoning approaches may face a fundamental limitation.

Study Highlights Reasoning Limits

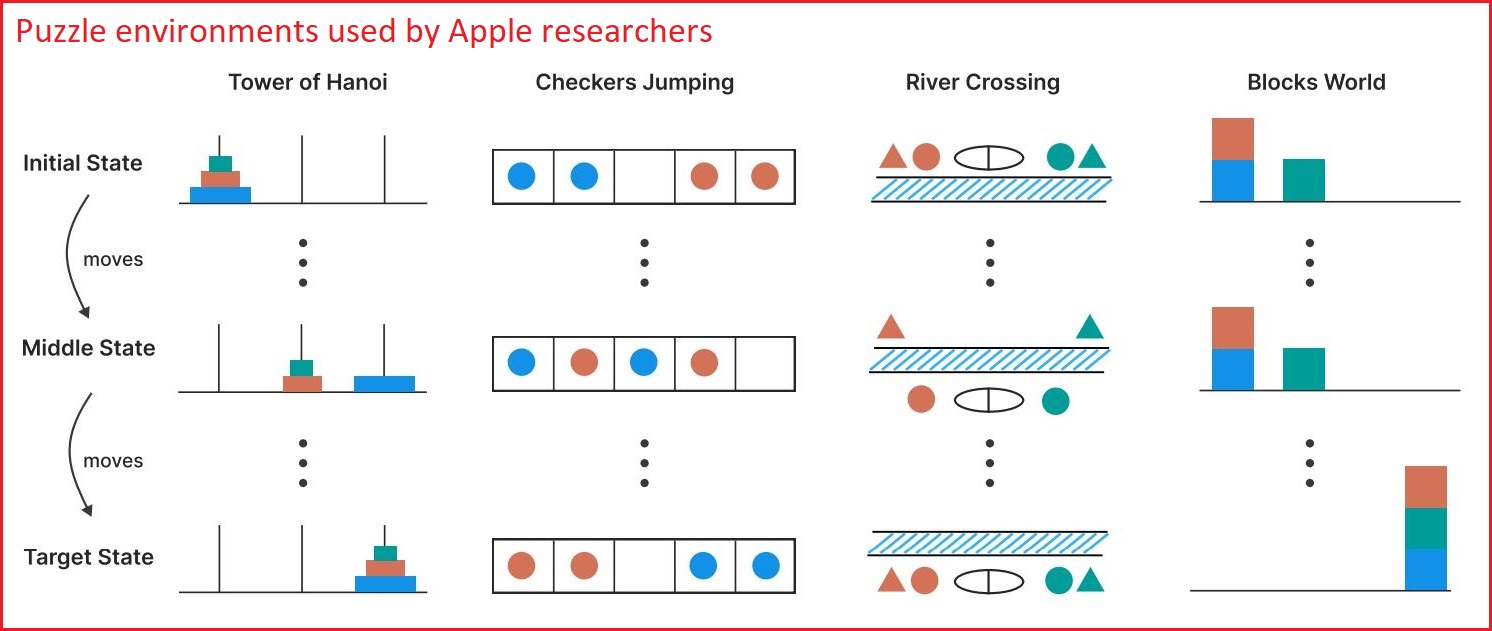

Apple researchers tested several advanced AI reasoning models, which they call large reasoning models (LRMs), including OpenAI’s o3-mini, DeepSeek’s R1, and Anthropic’s Claude 3.7 Sonnet. They found that while these models handle simpler tasks well, their accuracy drops sharply as problems grow more complex. The team described this as a “complete collapse” on harder versions of puzzles such as the Tower of Hanoi and river crossing problems.

The study, titled ‘The Illusion of Thinking’, found that even giving the models the solution algorithm did not improve their ability to solve these puzzles. This suggests the problem runs deeper than a simple lack of knowledge.

“These insights challenge prevailing assumptions about LRM capabilities and suggest that current approaches may be encountering fundamental barriers to generalisable reasoning.”

—Apple Researchers, ‘The Illusion of Thinking’

The findings add to growing skepticism about the hype surrounding large language models (LLMs). Gartner has estimated that 80% of generative AI projects will fail to meet expectations by 2026, citing unrealistic goals and a poor grasp of the technology’s limitations (Gartner, February 2024).

Expert Weighs In on AGI Prospects

Gary Marcus, a cognitive scientist known for his criticism of AI overpromising, said the Apple study makes it considerably less likely that artificial general intelligence (AGI) can be achieved with current LLM architectures. He called the results “pretty devastating to LLMs.”

Marcus elaborated, “What this means for business and society is that you can’t simply drop o3 or Claude into some complex problem and expect it to work reliably.”

He cautioned against expecting LLMs to fundamentally transform society anytime soon.

OpenAI Remains Optimistic About Future Advances

Sam Altman, CEO of OpenAI, takes a far more optimistic view. In a recent blog post, he wrote that humanity is “close to building digital superintelligence,” predicting that 2026 will likely bring systems able to generate novel insights and that robots capable of real-world tasks may arrive in 2027.

Altman also pointed to the rapid pace of AI development, noting that progress has outstripped predictions made just a few years ago. Meanwhile, Meta is reportedly preparing to launch a new AI lab focused on developing superintelligence, further intensifying the race toward advanced AI.

The path to AGI remains uncertain, but the Apple study is a clear reminder that today’s reasoning models still struggle to generalize beyond relatively simple problems, and that significant challenges lie ahead in building truly intelligent machines.