
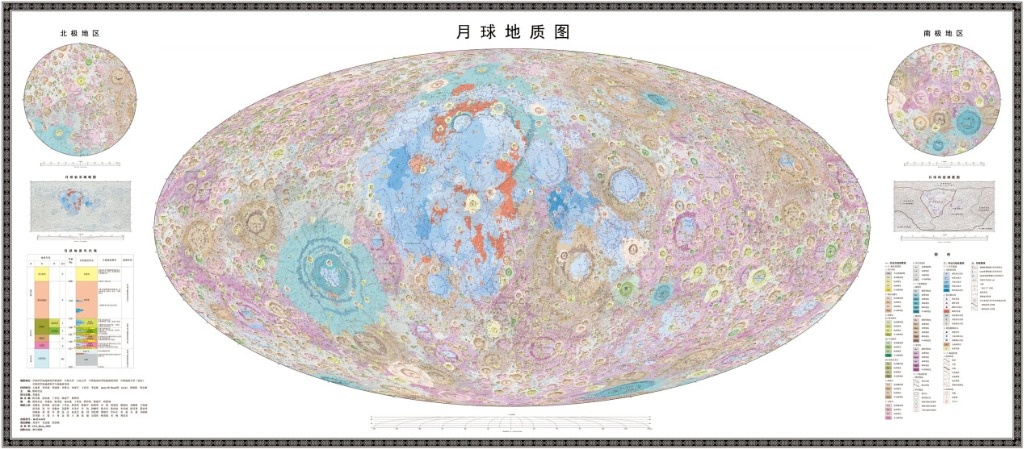

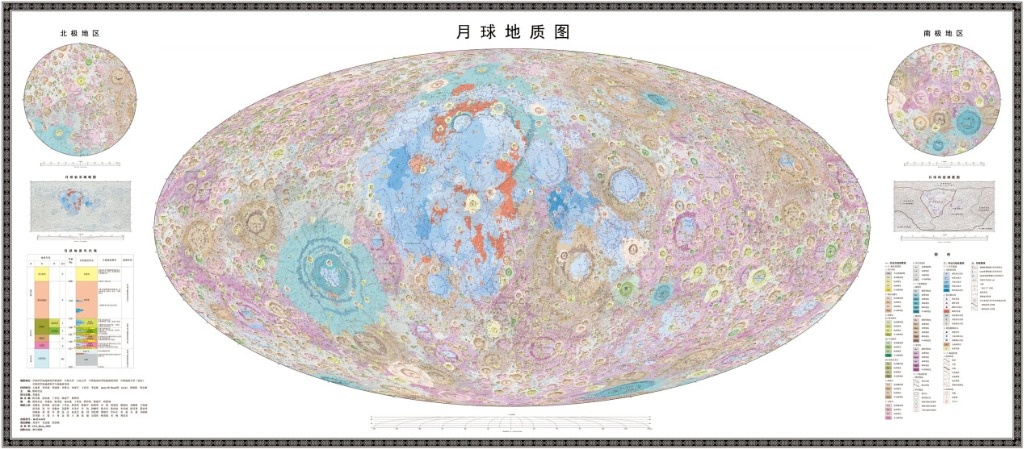

China publishes the world's highest-precision, high-definition atlas of the Moon

Beijing. China has published the world's first set of maps of the Moon's surface with the highest precision and definition. Based on scientific studies, the atlas provides basic cartographic data for future research and exploration on …

Food sovereignty and the climate crisis: SIAM in the eye of the storm

Photo credit: Ahmed Boussarhane/LNT. SIAM is above all a professional exhibition. This means that for its many exhibitors, …

Judiciary sentences soccer coach to 14 years in prison for inappropriate touching of a minor

Luis Pinche García was sentenced for inappropriate touching of a minor under 13 years of age. (Photo: JUDICIAL …

Manufacturing exports get off to a strong start in 2024, according to ADEX

Peruvian manufacturing exports amounted to US$506,406,000 in January of this year, reflecting an increase …

Valkenburg Amusement Park Fined €6 After Gondola Accident: Public Prosecution Service Decision

Photo: Orange Media. The Calidius Balloon Tower, where the accident happened in 2022. In association with L1mburg. NOS News • today, 6:32 p.m. …